Supercomputing and E-Science

Above all, modern science means dealing with large amounts of data and having the right scientific services, tools and infrastructure to handle that data. The Supercomputing and E-Science section collaborates with AIP scientists and their working groups and projects. We ensure that the FAIR principles are applied: the workflows for processing and publishing data collections use modern metadata standards, so that the data itself is findable, accessible, interoperable and reusable.

Collaborative research environments (CRE) are built on efficient connections between supercomputing and storage facilities. Another focus of the section is data publication applying the standards of the International Virtual Observatory Alliance (IVOA) and contributing to their development. Furthermore, the E-Science section actively develops open source software for scientific applications, especially data publication. The backbone of our scientific infrastructure is the supercomputing and virtualization environment together with the cluster storage capacity.

Supercomputing, storage and virtualization infrastructure

AIP hosts several compute facilities for research calculations and data analysis. There are two compute clusters, Leibniz and Newton, with about 3,000 cores, located in the Leibniz and Schwarzschild buildings. A fast InfiniBand interconnect between the clusters gives access to the data storage, which is provided by multiple Lustre file systems. Approximately 4 PB of storage capacity is available for scientific data from observations and simulations, and each cluster has 0.5 PB of storage for parallel I/O from the compute nodes. GPU computing facilities are also available for development and production runs. A detailed user guide for the clusters is available on the AIP internal pages.

In addition to the clusters, the E-Science team hosts the Compute Cloud Infrastructure (CCI), based on the open-source virtualization solution Proxmox. Currently, 65 virtual machines with different tasks and profiles are hosted on Proxmox. These tasks include data analysis and data reduction pipelines, as well as GitLab, CI and Mattermost services and other web services. The services and pipelines run inside separate virtual machines or Docker containers. These isolated environments increase security and provide stable operating conditions. The AIP backbone network is implemented with 10 Gbit/s switches, giving the compute clusters and the VMs access to the large data collections.

In 2021/2022, new compute and storage facilities were added to the AIP IT infrastructure with ERDE funds.

Collaborative research environments (CRE)

International scientific collaborations involving AIP scientists are supported by COLAB, a browser-based software that provides a common interface to different kinds of data storage and compute resources and to a wide range of scientific programming and analysis environments. COLAB uses a virtualisation layer based on the oVirt software, which allows efficient use of the institute’s hardware resources. COLAB is an advanced implementation of the “code to data” paradigm, in which the analysis code is brought to the data instead of transferring large data sets to the user. GitLab with continuous integration (CI) adds a complete source code management system for modern code development, with version control and automated testing, and Mattermost provides team messaging.

Furthermore, specialised CREs have been built for cosmology (CLUES, MultiDark, HESTIA), for the MUSE collaboration (MUSEWise), and for observers using the GREGOR solar telescope (Gregor). They give collaboration members access to large data collections that are still being worked on and have not yet been published. The analysis facilities of each CRE are customised for the respective collaboration.

Data Publication and Virtual Observatory (VO)

The Daiquiri software stack is used for data publication and data services. Several data releases (DR) have been published using this framework, currently including the final RAVE DR6 of the RAVE survey, the three data releases of the photographic plate archive APPLAUSE, the Gaia Early Data Release 3 of the European Gaia satellite (AIP being one of four partner data centers), the MUSEWIDE survey, and many smaller data collections. The data can be accessed either via a browser-based SQL query interface or via scripted access using the Virtual Observatory Table Access Protocol (TAP); both astropy and TOPCAT are supported.
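For scripted access, a query can be sent to a TAP endpoint from Python. The following is a minimal sketch, not part of Daiquiri itself: it assumes the third-party pyvo package (which builds on astropy) for the network call, and the endpoint URL `https://gaia.aip.de/tap`, the table name `gaiaedr3.gaia_source` and its columns `source_id`, `ra` and `dec` are assumptions that should be checked against the archive's own documentation. The helper names `cone_search_adql` and `run_tap_query` are hypothetical; the first only assembles a standard ADQL cone search using `CONTAINS`, `POINT` and `CIRCLE`.

```python
"""Sketch of scripted TAP access to a Daiquiri-based archive (assumed endpoint/table)."""


def cone_search_adql(table: str, ra_deg: float, dec_deg: float,
                     radius_deg: float, columns=("source_id", "ra", "dec"),
                     limit: int = 100) -> str:
    """Build an ADQL cone search around (ra_deg, dec_deg) with radius radius_deg.

    Assumes the table stores ICRS positions in columns named `ra` and `dec`.
    """
    if not 0.0 <= ra_deg < 360.0:
        raise ValueError("ra_deg must be in [0, 360)")
    if not -90.0 <= dec_deg <= 90.0:
        raise ValueError("dec_deg must be in [-90, 90]")
    if radius_deg <= 0.0:
        raise ValueError("radius_deg must be positive")
    cols = ", ".join(columns)
    return (
        f"SELECT TOP {limit} {cols} FROM {table} "
        f"WHERE 1 = CONTAINS(POINT('ICRS', ra, dec), "
        f"CIRCLE('ICRS', {ra_deg}, {dec_deg}, {radius_deg}))"
    )


def run_tap_query(service_url: str, adql: str):
    """Submit the query synchronously via pyvo and return an astropy Table."""
    import pyvo  # imported lazily so that building queries needs no third-party package

    service = pyvo.dal.TAPService(service_url)
    return service.search(adql).to_table()


# Example usage (assumed endpoint and table; requires network access and pyvo):
# adql = cone_search_adql("gaiaedr3.gaia_source", 56.75, 24.12, 0.1)
# table = run_tap_query("https://gaia.aip.de/tap", adql)
# print(len(table))
```

Building the query string separately from submitting it keeps the ADQL easy to inspect, or to paste into the browser-based query interface or TOPCAT.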

All published data collections carry extensive, VO-compliant metadata and registered DOIs (Digital Object Identifiers) to improve their findability and citability. The IVOA standard for the provenance of astronomical data was developed and completed with essential input from the E-Science section, and a reference implementation is provided at the APPLAUSE site.
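The IVOA Provenance Data Model builds on W3C PROV concepts: entities (data products), activities (processing steps) and agents, linked by relations such as used, wasGeneratedBy and wasAssociatedWith. The sketch below is a simplified in-memory illustration of these ideas using Python dataclasses. It is not the standard's serialization format nor the APPLAUSE reference implementation, and the class and function names as well as the example processing chain are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Entity:
    """A data product, e.g. a raw plate scan or a calibrated catalog."""
    id: str
    was_generated_by: Optional[Activity] = None  # wasGeneratedBy relation


@dataclass
class Activity:
    """A processing step that uses input entities (used relation)."""
    id: str
    used: List[Entity] = field(default_factory=list)
    agent: Optional[str] = None  # who or what ran the step (wasAssociatedWith)


def generate(activity: Activity, entity_id: str) -> Entity:
    """Record that `activity` generated a new entity."""
    return Entity(entity_id, was_generated_by=activity)


def lineage(entity: Entity) -> List[str]:
    """List entity and activity ids upstream of `entity`, depth-first,
    following wasGeneratedBy and used links back to the original inputs."""
    trail: List[str] = []
    seen_entities: Set[str] = set()
    seen_activities: Set[str] = set()
    stack = [entity]
    while stack:
        current = stack.pop()
        if current.id in seen_entities:
            continue
        seen_entities.add(current.id)
        trail.append(current.id)
        act = current.was_generated_by
        if act is not None and act.id not in seen_activities:
            seen_activities.add(act.id)
            trail.append(act.id)
            # reversed() so that the first listed input is visited first
            stack.extend(reversed(act.used))
    return trail


# Hypothetical chain: plate scan -> calibration -> source extraction -> catalog
scan = Entity("plate_scan")
calibration = Activity("calibration", used=[scan], agent="reduction_pipeline")
image = generate(calibration, "calibrated_image")
extraction = Activity("source_extraction", used=[image])
catalog = generate(extraction, "catalog")
print(lineage(catalog))
```

Tracing a published product back through its processing steps in this way is what makes a data release reproducible and its inputs citable.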

Apart from curating and publishing data, the E-Science section works with the scientific working groups and provides assistance with cross-matching catalogs and applying machine learning methods.
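A positional cross-match pairs each source in one catalog with its nearest counterpart in another, within a maximum angular separation. The sketch below assumes positions given as (RA, Dec) in degrees and uses the haversine formula with a brute-force search, which is adequate for small catalogs. The function names are hypothetical; for large catalogs a tree-based method such as astropy's `SkyCoord.match_to_catalog_sky` is the usual choice.

```python
import math
from typing import List, Optional, Sequence, Tuple

Coord = Tuple[float, float]  # (ra, dec) in degrees


def angular_separation_deg(a: Coord, b: Coord) -> float:
    """Great-circle distance between two sky positions in degrees (haversine)."""
    ra1, dec1 = map(math.radians, a)
    ra2, dec2 = map(math.radians, b)
    sin_ddec = math.sin((dec2 - dec1) / 2.0)
    sin_dra = math.sin((ra2 - ra1) / 2.0)
    h = sin_ddec ** 2 + math.cos(dec1) * math.cos(dec2) * sin_dra ** 2
    # min() guards against rounding pushing sqrt(h) slightly above 1
    return math.degrees(2.0 * math.asin(min(1.0, math.sqrt(h))))


def crossmatch(cat1: Sequence[Coord], cat2: Sequence[Coord],
               radius_arcsec: float) -> List[Optional[Tuple[int, float]]]:
    """For each source in cat1, return (index in cat2, separation in arcsec)
    of its nearest neighbour, or None if nothing lies within radius_arcsec."""
    matches: List[Optional[Tuple[int, float]]] = []
    for src in cat1:
        best: Optional[Tuple[int, float]] = None
        for j, cand in enumerate(cat2):
            sep = angular_separation_deg(src, cand) * 3600.0
            if sep <= radius_arcsec and (best is None or sep < best[1]):
                best = (j, sep)
        matches.append(best)
    return matches
```

The haversine form stays numerically stable for the small separations typical of cross-matching, and because it uses the sine of half the RA difference it handles the 0°/360° wrap-around without special cases.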

The published research data collections are described in more detail on the Research Data page.

Software development and community work

The Daiquiri software stack is being developed by the E-Science section and used to publish many research data collections. The software is published on GitHub under the Apache 2.0 open source license. Daiquiri provides numerous projects with customized web services, supporting both the active collaboration phase, for example with user management for scientific collaborations as used in the 4MOST project, and the later publication of data releases such as APPLAUSE, RAVE, MUSE-Wide and Gaia@AIP. The 4MOST public archive is currently under development.

The RDMO (Research Data Management Organiser) project has gained considerable support. Around 35 institutions (university libraries, the Physikalisch-Technische Bundesanstalt, Helmholtz centres, Leibniz institutes) now use the software, developed by the E-Science section in collaboration with KIT and FHP, as a component of their data management. RDMO was originally started by AIP and FHP as a DFG-funded research project; its open source approach has enabled it to continue beyond the end of DFG funding. The RDMO Arbeitsgemeinschaft (working group) brings together contributors, users and participants from all over Germany, and AIP is a signatory of its Memorandum of Understanding. As a continuation, AIP is participating in a BMBF project on data curation and data certification (DDP Bildung).

The National Research Data Infrastructure (in German: Nationale Forschungsdateninfrastruktur, NFDI) aims to systematically index, curate, interconnect and make available the valuable data holdings of science and research. PUNCH4NFDI represents the four research areas of particle physics, astrophysics, astroparticle physics, and hadron and nuclear physics in the NFDI (“PUNCH” stands for “Particles, Universe, NuClei & Hadrons”). In addition to AIP and DESY, which acts as coordinator, the consortium includes 19 other funding recipients and 22 other partners from the Leibniz Association, the Helmholtz Association, the Max Planck Society and universities. The work of PUNCH4NFDI will focus on novel methods of “big data” management as well as “open data” and “open science”. At its centre is a “Science Data Platform”, which will allow scientific data in the form of digital research products to be preserved, made accessible and intelligently linked throughout their entire life cycle.

Debian Astro, a Pure Blend of the Debian operating system, published its second major release in July 2019. The new release features more than 300 software packages for astronomy, including Astropy and its ecosystem as well as machine learning packages. Classic packages such as the image processing system IRAF and the ESO-MIDAS software are also available. Debian Astro is developed mainly within the E-Science section.

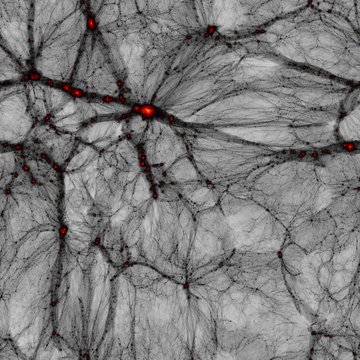

The Virtual Reality pilot project was started in 2016 and used successfully at IAU Symposium 334 in June 2017. Equipped with virtual reality glasses, participants were able to travel through a universe generated by a cosmological computer simulation. The project received a special award at the 18th Potsdam Congress Award in March 2019 for its innovative technology.